When you start working with data science and machine learning you notice that there’s a important thing you will miss more often than not while trying to solve a problem: the data!

Finding the right type of data for a specific problem is not easy, and despite the huge amount of collected and even processed datasets over the internet, many times you'll be forced to extract information from scratch in the messy, wild web. That’s where web scraping comes in.

For the last few weeks I’ve been researching about web scraping with Python and Scrapy, and decided to apply it to a Contact Extractor, a bot that aims to crawl some websites and collect emails and other contact information given some tag search.

There are many free Python packages out there focused on web scraping, crawling and parsing, like Requests, Selenium and Beautiful Soup, so if you want, take time to look into some of them and decide which one fits you the best. This article gives a brief introduction on some of those main libraries.

For me, I've been using Beautiful Soup for a while, mostly to parse HTML, now combining it to Scrapy's environment, since it's a powerful multi-purpose scraping and web crawling framework. To learn about it's features, I suggest going through some youtube tutorials and reading its documentation.

Although this series of articles intend to work with phone numbers and perhaps other forms of contact information as well, in this first one we'll stick just with email extraction to keep things simple. The goal of this first scraper is to search google for many websites, given a certain tag, parse each one looking for emails and register them inside a data frame.

So, let’s suppose you want to get a thousand emails related to Real State agencies, you could type a few different tags and have those mails stored in a CSV file in your computer. That would be amazing to help building a quick mailing list, for later on sending many emails at once.

This problem will be tackled in 5 steps:

1 — Extract websites from google with googlesearch

2— Make a regex expression to extract emails

3 — Scrape websites using a Scrapy Spider

4 — Save those emails in a CSV file

5 — Put everything together

This article will present some code, but feel free to skip it if you’d like, I’ll try to make it as intuitive as possible. Let’s go over the steps.

1 — Extract websites from google with googlesearch

In order to extract URLs from a tag, we’re going to make use of googlesearch library. This package has a method called search, which, given the query, a number of websites to look for and a language, will return the links from a Google search. But before calling this function let's import a few modules:

import logging

import os

import pandas as pd

import re

import scrapy

from scrapy.crawler import CrawlerProcess

from scrapy.linkextractors.lxmlhtml import LxmlLinkExtractor

from googlesearch import search

logging.getLogger('scrapy').propagate = FalseThat last line is used to avoid getting too many logs and warnings when using Scrapy inside Jupyter Notebook.

So let’s make a simple function with search:

def get_urls(tag, n, language):

urls = [url for url in search(tag, stop=n, lang=language)][:n]

return urls

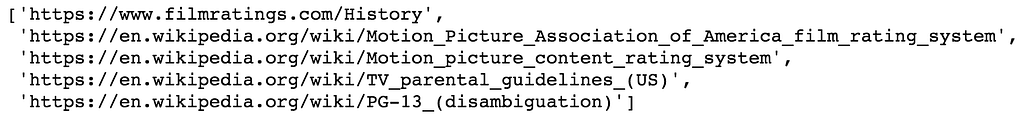

This piece of code returns a list of URL strings. Let's check it out:

get_urls('movie rating', 5 , 'en')

Cool. Now that URL list (call it google_urls) is going to work as the input for our Spider, which will read the source code of each page and look for emails.

2 — Make a regex expression to extract emails

If you are interested in text handling, I highly recommend that you get familiarised with regular expressions (regex), because once you master them, it becomes quite easy to manipulate text, looking for patterns in strings — to find, extract and replace parts of a text — based on a sequence of characters. For instance, extracting emails could be performed applying the findall method, like follows:

mail_list = re.findall(‘\w+@\w+\.{1}\w+’, html_text)The expression ‘\w+@\w+\.{1}\w+’ used here could be translated to something like this:

“Look for every piece of string that starts with one or more letters, followed by an at sign (‘@’), followed by one or more letters with a dot in the end. After that it should have one or more letters again.”

In case you'd like to learn more about regex, there are some great videos on youtube, including this introduction by Sentdex, and the documentation can help you getting started with.

Of course the expression above could be improved to avoid unwanted emails or errors in the extraction, but we’ll take it as good enough for now.

3 — Scrape websites using a Scrapy Spider

A simple Spider is composed of a name, a list of URLs to start the requests and one or more methods to parse the response. Our complete Spider look's like this:

class MailSpider(scrapy.Spider):

name = 'email'

def parse(self, response):

links = LxmlLinkExtractor(allow=()).extract_links(response)

links = [str(link.url) for link in links]

links.append(str(response.url))

for link in links:

yield scrapy.Request(url=link, callback=self.parse_link)

def parse_link(self, response):

for word in self.reject:

if word in str(response.url):

return

html_text = str(response.text)

mail_list = re.findall('\w+@\w+\.{1}\w+', html_text)

dic = {'email': mail_list, 'link': str(response.url)}

df = pd.DataFrame(dic)

df.to_csv(self.path, mode='a', header=False)

df.to_csv(self.path, mode='a', header=False)Breaking it down, the Spider takes a list of URLs as input and read their source codes one by one. You may have noticed that more than just looking for emails inside the URLs, we're also searching for links. That's because in most websites contact information is not going to be found straight in the main page, but rather in a contact page or so. Therefore, in the first parse method we’re running a link extractor object (LxmlLinkExtractor), that checks for new URLs inside a source. Those URLs are passed to the parse_link method — this is the actual method where we apply our regex findall to look for emails.

The piece of code below is the one responsible for sending links from one parse method to another. This is accomplished by a callback argument that defines to which method the request URL must be sent to.

yield scrapy.Request(url=link, callback=self.parse_link)

Inside parse_link we can note a for loop using the variable reject. That's a list of words to be avoided while looking for web addresses. For instance, if I’m looking for tag='restaurants in Rio de Janeiro', but don’t want to come across facebook or twitter pages, I could include those words as bad words when creating the Spider's process:

process = CrawlerProcess({'USER_AGENT': 'Mozilla/5.0'})

process.crawl(MailSpider, start_urls=google_urls, path=path, reject=reject)

process.start()The google_urls list will be passed as an argument when we call the process method to run the Spider, path defines where to save the CSV file and reject works as described above.

Using processes to run spiders is a way to implement Scrapy inside Jupyter Notebooks. If you run more than one Spider at once, Scrapy will speed things up using multi-processing. That's a big advantage of choosing this framework against its alternatives.

4 — Save those emails in a CSV file

Scrapy has also it’s own methods to store and export the extracted data, but in this case I’m just using my own (probably slower) way, with pandas’ to_csv method. For each website scraped, I make a data frame with columns: [email, link], and append it to a previously created CSV file.

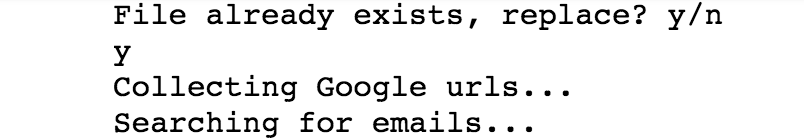

Here I’m just defining two basic helper functions to create the new CSV file and, in case that file already exists, it asks if we’d like to overwrite.

def ask_user(question):

response = input(question + ' y/n' + '\n')

if response == 'y':

return True

else:

return False

def create_file(path):

response = False

if os.path.exists(path):

response = ask_user('File already exists, replace?')

if response == False: return

with open(path, 'wb') as file:

file.close()

5 — Put everything together

Finally we're building the main function where everything works together. First it writes an empty data frame to a new CSV file, than get google_urls using get_urls function and start the process executing our Spider.

def get_info(tag, n, language, path, reject=[]):

create_file(path)

df = pd.DataFrame(columns=['email', 'link'], index=[0])

df.to_csv(path, mode='w', header=True)

print('Collecting Google urls...')

google_urls = get_urls(tag, n, language)

print('Searching for emails...')

process = CrawlerProcess({'USER_AGENT': 'Mozilla/5.0'})

process.crawl(MailSpider, start_urls=google_urls, path=path, reject=reject)

process.start()

print('Cleaning emails...')

df = pd.read_csv(path, index_col=0)

df.columns = ['email', 'link']

df = df.drop_duplicates(subset='email')

df = df.reset_index(drop=True)

df.to_csv(path, mode='w', header=True)

return df

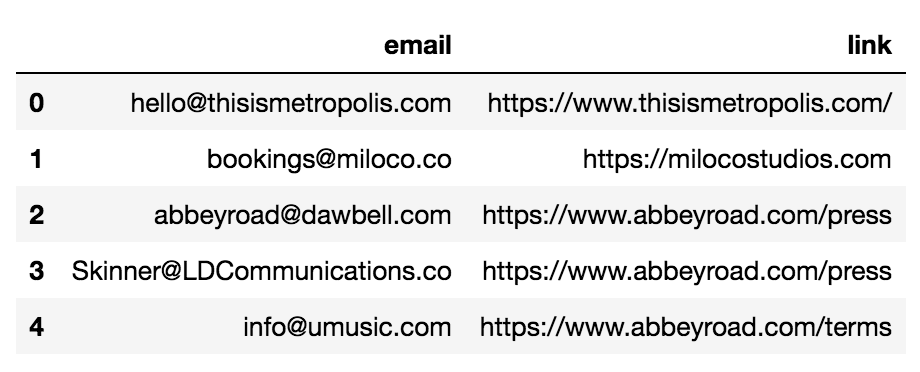

Ok, let’s test it and check the results:

bad_words = ['facebook', 'instagram', 'youtube', 'twitter', 'wiki']

df = get_info('mastering studio london', 300, 'pt', 'studios.csv', reject=bad_words)

As I allowed an old file to be replaced, studios.csv was created with the results. Besides storing the data in a CSV file, our final function returns a data frame with the scraped information.

df.head()

And there it is! Even though we have a list with thousands of emails, you'll see that among them some seem weird, some are not even emails, and probably most of them ends up being useless for us. So the next step is going to be finding ways to filter out a great portion of non-relevant emails and looking for strategies to keep only those which could be of some use. Maybe using Machine Learning? I hope so.

That’s all for this post. This article was written as the first of a series using Scrapy to build a Contact Extractor. Thank you if you kept reading until the end. For now, I'm happy to write my second post. Feel free to let any comments, ideas or concerns. And if you enjoyed it, don’t forget to clap! See you next post.

Web scraping to extract contact information— Part 1: Mailing Lists was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.